Design a Border Surveillance System Based on Autonomous Unmanned Aerial Vehicles (UAV)

DOI: https://doi.org/10.58564/IJSER.2.4.2023.119

Keywords: Autonomous Drone, border surveillance system, Unmanned Aerial Vehicle (UAV), YOLO algorithm.

Abstract

Following the spread of autonomous driving systems in cars, the next step is the development of autonomous drone systems. Such systems have applications in many military and civil fields, including surveillance and aerial photography as an alternative to satellites, search and rescue, and the dissemination of aid and distribution of goods, since drones can cover large areas while saving effort and human cost.

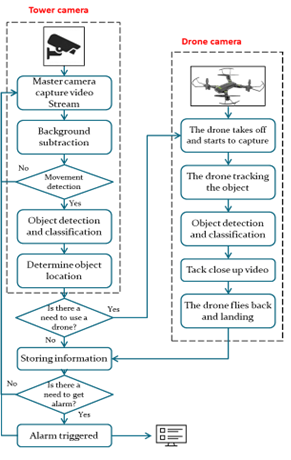

This paper discusses the use of autonomous drones to design a border surveillance system. Current surveillance systems were studied to assess the feasibility of using drones as an alternative to long-range cameras, and a design proposal is presented that operates at three different levels of surveillance. The paper also discusses controlling the drone through a single-board computer installed on the control tower, together with the base of the drone's charging station.

To achieve the required balance between the speed of drone control and the accuracy of object detection and classification, parallel processing was applied: fast algorithms (contour detection) handle tracking and detection, while a high-accuracy deep learning model (YOLOv8n) handles detection and classification. The model was trained on a custom dataset to improve the accuracy of object detection and classification in images taken from the drone's different viewing angles.
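
The abstract does not give implementation details of this parallel pipeline, so the following Python sketch only illustrates the general idea under stated assumptions: a fast path runs on every frame, while a slow classifier runs in a worker thread and receives a new frame only when it is idle, so tracking never waits for classification. Contour detection is approximated here by thresholded frame differencing plus connected-component bounding boxes, and `classify_stub` is a hypothetical placeholder for a YOLOv8n inference call (it only sleeps and labels regions by area). All function names are illustrative, not taken from the paper.

```python
import concurrent.futures
import time
from typing import Dict, List, Optional, Tuple

Frame = List[List[int]]          # grayscale frame as rows of 0-255 intensities
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive


def motion_boxes(prev: Frame, curr: Frame, thresh: int = 30) -> List[Box]:
    """Fast path: threshold the frame difference and return bounding boxes of
    4-connected changed regions (a simple stand-in for contour detection)."""
    h, w = len(curr), len(curr[0])
    mask = [[abs(curr[y][x] - prev[y][x]) > thresh for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                # Flood-fill one changed region with an explicit stack.
                stack = [(x, y)]
                seen[y][x] = True
                xs, ys = [], []
                while stack:
                    cx, cy = stack.pop()
                    xs.append(cx)
                    ys.append(cy)
                    for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                        if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            stack.append((nx, ny))
                boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes


def classify_stub(frame: Frame, box: Box) -> str:
    """Slow path: HYPOTHETICAL placeholder for a YOLOv8n call.
    It only simulates inference latency and labels a region by its area."""
    time.sleep(0.05)
    x0, y0, x1, y1 = box
    area = (x1 - x0 + 1) * (y1 - y0 + 1)
    return "vehicle" if area >= 6 else "person"


def classify_all(frame: Frame, boxes: List[Box]) -> List[str]:
    return [classify_stub(frame, b) for b in boxes]


def run(frames: List[Frame]) -> Tuple[List[List[Box]], Dict[int, List[str]]]:
    """Track every frame on the fast path; give the slow classifier
    at most one frame at a time in a background worker thread."""
    tracks: List[List[Box]] = []
    labels: Dict[int, List[str]] = {}
    pending: Optional[Tuple[int, concurrent.futures.Future]] = None
    with concurrent.futures.ThreadPoolExecutor(max_workers=1) as pool:
        for i in range(1, len(frames)):
            boxes = motion_boxes(frames[i - 1], frames[i])
            tracks.append(boxes)
            # Collect a finished classification without blocking the loop.
            if pending is not None and pending[1].done():
                labels[pending[0]] = pending[1].result()
                pending = None
            # Submit a new frame only when the classifier is idle.
            if pending is None and boxes:
                pending = (i, pool.submit(classify_all, frames[i], boxes))
        if pending is not None:  # drain the last outstanding job
            labels[pending[0]] = pending[1].result()
    return tracks, labels


def make_frame(x: int, y: int, size: int = 6) -> Frame:
    """Synthetic frame with a bright 2x2 block at (x, y)."""
    f = [[0] * size for _ in range(size)]
    for dy in range(2):
        for dx in range(2):
            f[y + dy][x + dx] = 200
    return f


if __name__ == "__main__":
    demo = [make_frame(0, 0), make_frame(2, 0), make_frame(4, 0)]
    print(run(demo))
```

The single-worker pool is a deliberate choice in this sketch: frames that arrive while the classifier is busy are tracked but not classified, which keeps the control loop's frame rate independent of the model's latency.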

Tests of the experimental prototype showed an improvement in the quality of the captured images and addressed the problem of occluded or blurred objects in the image.

The results also confirmed that a single-board computer can be relied on to process images and control the drone to track moving targets, which was not possible when images were transferred to a remote central processor.
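
The abstract does not state which control law the single-board computer uses to follow a target. As one hedged illustration, a common approach is a proportional controller that converts the tracked bounding box into velocity commands. The sketch below assumes a forward-facing camera, image coordinates with y growing downward, hypothetical gains, and a hypothetical desired target size; `track_command` and `VelocityCommand` are illustrative names, not part of any drone API or the authors' code.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class VelocityCommand:
    yaw_rate: float    # positive turns toward a target right of centre (assumed convention)
    climb_rate: float  # positive climbs (assumed convention)
    forward: float     # positive moves toward the target


def track_command(box: Tuple[float, float, float, float], frame_w: int, frame_h: int,
                  target_area_frac: float = 0.05, k_yaw: float = 1.0,
                  k_climb: float = 1.0, k_fwd: float = 2.0,
                  max_cmd: float = 1.0) -> VelocityCommand:
    """Proportional controller: each command is a gain times a normalised error,
    clamped to [-max_cmd, max_cmd]. All gains here are hypothetical."""
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    # Normalised centring errors in [-1, 1]: ex > 0 if the target is right of
    # centre, ey > 0 if it is below centre (image y grows downward).
    ex = (cx - frame_w / 2) / (frame_w / 2)
    ey = (cy - frame_h / 2) / (frame_h / 2)
    # Size error: positive when the target looks smaller than desired (too far).
    area_frac = ((x1 - x0) * (y1 - y0)) / (frame_w * frame_h)
    ez = (target_area_frac - area_frac) / target_area_frac

    def clamp(v: float) -> float:
        return max(-max_cmd, min(max_cmd, v))

    return VelocityCommand(
        yaw_rate=clamp(k_yaw * ex),
        climb_rate=clamp(-k_climb * ey),  # target below centre -> descend
        forward=clamp(k_fwd * ez),
    )


if __name__ == "__main__":
    # Target right of centre and small: expect a right turn and forward motion.
    print(track_command((540, 220, 580, 260), 640, 480))
```

Running this loop on board avoids the image uplink and command downlink that a remote processor would add to every control cycle, which is consistent with the abstract's observation about remote processing.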

License

Copyright (c) 2023 Saif S. Abood, Prof. Dr. Karim Q. Hussein, Prof. Dr. Methaq T. Gaata

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.