Classification of Human Activity Recognition Using Machine Learning on the WISDM Dataset

DOI:

https://doi.org/10.58564/IJSER.3.3.2024.222

Keywords:

HAR, CNN, RF, machine learning

Abstract

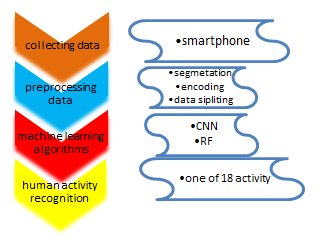

Human activity recognition (HAR) is growing in importance as it seeks to improve everyday life and healthcare through more accessible and efficient technology. Its applications include smart homes, robotics, security, and human-computer interaction. HAR also supports well-being in health, wellness, and sports.

Although the complexity of human behavior poses challenges, advances in machine learning offer promising solutions. Continued research into accurately detecting a wide range of human activities underscores the impact of HAR on technological development and its broad applicability.

In this work, a convolutional neural network (CNN) and a random forest (RF) classifier were developed for human activity recognition using the WISDM-51 dataset, which contains 18 human activities. The CNN achieved an accuracy of 89.36%, whereas the RF reached a higher accuracy of 93.46%, a difference of 4.10 percentage points. The results suggest that the proposed algorithms offer promising potential.
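The abstract does not describe how the sensor data were prepared or scored, so the following is only an illustrative sketch under stated assumptions. It shows one common way to turn a tri-axial accelerometer stream into fixed-length windows with simple per-axis statistics, and how an accuracy figure such as those reported above is computed as the fraction of correct predictions. The function names, the window size, the step, and the toy signal are all hypothetical and are not taken from the paper.

```python
from statistics import mean, pstdev


def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def window_features(window):
    """Return [mean, std] for each axis of a window of (x, y, z) samples."""
    features = []
    for axis in zip(*window):  # transpose samples into per-axis sequences
        features.extend([mean(axis), pstdev(axis)])
    return features


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true activity labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy signal (assumed values): x ramps upward, y and z stay constant.
signal = [(float(i), 0.0, 1.0) for i in range(10)]

# Hypothetical window size and step, chosen only to keep the example small.
windows = list(sliding_windows(signal, size=4, step=2))
features = [window_features(w) for w in windows]

# Hypothetical labels, used only to illustrate the accuracy calculation.
score = accuracy(["walk", "jog", "sit"], ["walk", "sit", "sit"])
```

Feature vectors of this kind could be passed to a classifier such as a random forest, while a CNN would more typically consume the raw windows directly; which inputs the authors actually used is not stated here.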

License

Copyright (c) 2024 Sarah W. Abdulmajeed

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
